Key Takeaways

- Hybrid architectures balance performance and cost. By keeping GPUs focused on inference and offloading orchestration and preprocessing to Lambda, enterprises get elasticity without constant GPU burn.

- Best suited for bursty workloads. Use cases like fraud detection, claims processing, and SaaS tenant management benefit most when traffic patterns are unpredictable.

- Integration challenges are real. Cold starts, data transfer overhead, and debugging across distributed layers can erode the efficiency gains if not managed.

- Externalized state is non-negotiable. Trying to store state inside Lambda or GPU containers breaks scalability—Redis, DynamoDB, or vector DBs are the right tools.

- Observability and workload profiling drive ROI. Without careful monitoring of GPU utilization and Lambda overhead, cost surprises can outweigh savings.

When enterprises talk about “agentic AI” systems today, two themes almost always collide: performance and cost. Everyone wants millisecond-level inference, but few are willing to pay for GPU clusters that sit idle when workloads fluctuate. That’s the paradox—especially in agentic systems, where traffic patterns spike unpredictably.

This is why hybrid architectures, where GPU inference nodes are paired with serverless functions like AWS Lambda, have started showing up in serious conversations. It’s not just a theoretical design pattern.

Healthcare startups and banks are already experimenting with it because they want elasticity without re-architecting their whole stack. Done right, the combination gives you the acceleration of NVIDIA GPUs and the pay-as-you-go model of serverless. Done poorly, you get a Frankenstein system riddled with cold starts, latency gaps, and debugging headaches.


Why Pair GPUs with Serverless at All?

On the surface, GPUs and Lambda look like odd companions. One is built for high-throughput, parallelized inference. The other is designed for tiny, ephemeral workloads. But when you break down real enterprise usage, the logic becomes clearer:

- Agentic workflows aren’t steady. A digital agent might be idle most of the day, then suddenly process 10,000 claims when a hospital uploads a batch of records. Scaling GPU clusters up and down in perfect sync with that isn’t trivial.

- Serverless absorbs orchestration overhead. Lambda can handle request parsing, lightweight preprocessing, authentication, or fan-out logic without touching the GPU. Why waste a $2/hour GPU instance on string manipulation?

- Cost distribution becomes surgical. Instead of running inference servers 24/7, you pin only the heavy lifting to GPU nodes. Everything else—glue code, I/O transforms, retries—flows through Lambda’s per-execution billing.
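A rough way to sanity-check the cost argument is a back-of-envelope comparison. The sketch below reuses the $2/hour GPU figure from above; the Lambda rates, request volume, and GPU busy hours are illustrative assumptions, so swap in your own numbers and current pricing before drawing conclusions.

```python
# Back-of-envelope monthly cost comparison. Every number passed in below is an
# illustrative assumption, not a quoted price.

HOURS_PER_MONTH = 730  # average hours in a month


def always_on_gpu_cost(gpu_hourly_usd: float) -> float:
    """Cost of one GPU instance running 24/7 for a month."""
    return gpu_hourly_usd * HOURS_PER_MONTH


def lambda_cost(requests: int, avg_duration_s: float, memory_gb: float,
                usd_per_gb_second: float, usd_per_million_requests: float) -> float:
    """Lambda bill = compute (GB-seconds) + per-request charge."""
    compute = requests * avg_duration_s * memory_gb * usd_per_gb_second
    invocations = requests / 1_000_000 * usd_per_million_requests
    return compute + invocations


def hybrid_cost(gpu_hourly_usd: float, gpu_busy_hours: float, lambda_usd: float) -> float:
    """GPU billed only for the hours it is actually up, plus the Lambda glue."""
    return gpu_hourly_usd * gpu_busy_hours + lambda_usd


if __name__ == "__main__":
    glue = lambda_cost(requests=5_000_000, avg_duration_s=0.2, memory_gb=0.5,
                       usd_per_gb_second=0.0000166667, usd_per_million_requests=0.20)
    print(f"always-on GPU: ${always_on_gpu_cost(2.0):,.2f}")
    print(f"hybrid:        ${hybrid_cost(2.0, gpu_busy_hours=200, lambda_usd=glue):,.2f}")
```

The comparison only holds if the GPU fleet can actually scale down between bursts; if the GPU stays up all month, the Lambda layer is pure added cost.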

The Moving Parts

To make this more concrete, let’s map the flow of an agent request through such a hybrid stack:

- Request lands in API Gateway → triggers a Lambda function.

- Lambda performs preprocessing (tokenization, enrichment, and routing decisions).

- Lambda dispatches an inference call to a GPU-backed container (e.g., deployed on ECS with NVIDIA Triton).

- The GPU container runs inference—this is where the model crunches.

- The result returns to Lambda for post-processing, error handling, and structured output.

- Lambda sends the final response back through API Gateway.

Notice how the GPU is kept laser-focused on inference. Everything else—the messy parts that vary with client, workflow, or compliance—stays in Lambda.
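The flow above can be sketched as a single Lambda handler. This is a minimal illustration, not a reference implementation: the `INFERENCE_URL` endpoint, the `text` and `tenant` fields, and the `label`/`score` response shape are all hypothetical, and the preprocessing is a stand-in for real tokenization or enrichment.

```python
import json
import os
import urllib.request
from typing import Callable

# Hypothetical internal URL of the GPU-backed inference service (assumption).
INFERENCE_URL = os.environ.get("INFERENCE_URL", "http://inference.internal:8000/infer")


def call_gpu_service(payload: dict, timeout_s: float = 5.0) -> dict:
    """POST the preprocessed payload to the GPU container and return its JSON reply."""
    req = urllib.request.Request(
        INFERENCE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return json.loads(resp.read())


def preprocess(body) -> dict:
    """Validate and normalize the request; the field names are illustrative."""
    if not isinstance(body, dict):
        raise ValueError("request body must be a JSON object")
    text = str(body.get("text", "")).strip()
    if not text:
        raise ValueError("missing 'text'")
    return {"text": text, "tenant": body.get("tenant", "default")}


def postprocess(raw: dict) -> dict:
    """Keep only the fields the client should see."""
    return {"label": raw.get("label"), "score": raw.get("score")}


def handler(event: dict, context=None,
            infer: Callable[[dict], dict] = call_gpu_service) -> dict:
    """API Gateway proxy handler: preprocess, call the GPU service, postprocess."""
    try:
        payload = preprocess(json.loads(event.get("body") or "{}"))
    except ValueError as exc:  # also covers malformed JSON
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
    try:
        result = postprocess(infer(payload))
    except Exception as exc:  # network errors, timeouts, bad replies
        return {"statusCode": 502, "body": json.dumps({"error": f"inference failed: {exc}"})}
    return {"statusCode": 200, "body": json.dumps(result)}
```

Passing `infer` as a parameter is a design choice for testability: the handler can be exercised locally with a stub, without a GPU container running.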

Where It Works Properly

Certain patterns benefit disproportionately from GPU + Lambda hybrids.

Spiky workloads

Fraud detection systems often light up only during high transaction volumes. You can’t justify an always-on GPU farm for a bank whose traffic arrives in bursts tied to specific time zones.

Multi-tenant SaaS

Startups hosting AI features for hundreds of customers. One customer sends nothing all week, another sends a flood on Friday night. Lambda absorbs routing, queuing, and per-tenant accounting.

Edge-to-cloud bridging

When data streams from Jetson devices or IoT sensors, Lambdas can filter noise and only forward relevant payloads to GPU inference nodes. Saves bandwidth and GPU cycles.
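As a rough sketch of that filtering step, a Lambda could drop low-confidence and near-duplicate readings before anything reaches the GPU. The `value` and `confidence` fields and both thresholds are assumptions made for illustration.

```python
from typing import Iterable, Iterator


def filter_readings(readings: Iterable[dict], min_confidence: float = 0.6,
                    min_delta: float = 0.05) -> Iterator[dict]:
    """Yield readings that are confident enough and differ from the last forwarded value."""
    last_value = None
    for reading in readings:
        if reading.get("confidence", 0.0) < min_confidence:
            continue  # too noisy to be worth a GPU call
        value = reading["value"]
        if last_value is not None and abs(value - last_value) < min_delta:
            continue  # effectively a duplicate of what was already forwarded
        last_value = value
        yield reading
```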

Compliance wrappers

In industries like healthcare, Lambdas can run lightweight PHI scrubbing or audit logging before and after inference. The GPU container never sees raw sensitive data.
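A scrubbing step of this kind might look like the sketch below. The three regex patterns are deliberately simplistic and are assumptions for illustration only; real PHI de-identification needs far broader coverage and review.

```python
import re
from typing import Dict, Tuple

# Illustrative patterns only; not a complete PHI definition.
_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def scrub(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace matches with placeholders and return per-type counts for audit logging."""
    counts = {}
    for label, pattern in _PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```

The counts give the audit log something to record without storing the sensitive values themselves.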

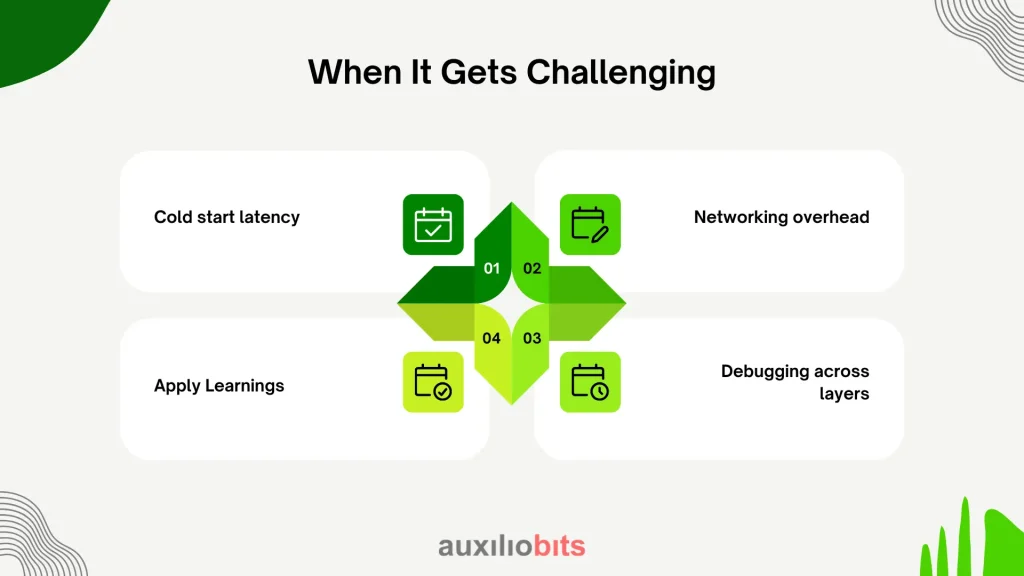

When It Gets Challenging

Every architecture has weak spots. In this case, the friction usually appears at integration boundaries.

- Cold start latency. A Lambda cold start might add 200 ms or more. For conversational agents where users expect near-instant responses, that’s painful. There are tricks (provisioned concurrency, SnapStart), but they don’t fully erase it.

- Networking overhead. Moving data between Lambda and GPU containers isn’t free. If you’re shuttling large embeddings or images, serialization and transfer can dominate compute time.

- Debugging across layers. Problems don’t stay isolated. A malformed JSON in Lambda might look like a GPU bug. Distributed tracing tools help, but teams often underestimate the ops burden.

- Cost surprises. Ironically, teams chasing cost efficiency sometimes overspend. Lambdas with heavy data transfer or memory use can rival GPU costs if misconfigured.
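The networking-overhead point is easy to measure before committing to a wire format. The sketch below compares a JSON-encoded embedding with the same vector packed as little-endian float32; the 1536-dimension vector is an arbitrary assumption, and packing to float32 trades away some precision.

```python
import json
import random
import struct
from typing import List


def encode_json(vec: List[float]) -> bytes:
    """Text encoding: convenient, but each float costs many bytes."""
    return json.dumps(vec).encode("utf-8")


def encode_f32(vec: List[float]) -> bytes:
    """Binary encoding: exactly 4 bytes per value (little-endian float32)."""
    return struct.pack(f"<{len(vec)}f", *vec)


def decode_f32(buf: bytes) -> List[float]:
    """Inverse of encode_f32."""
    return list(struct.unpack(f"<{len(buf) // 4}f", buf))


if __name__ == "__main__":
    rng = random.Random(0)
    embedding = [rng.random() for _ in range(1536)]
    print("json bytes:   ", len(encode_json(embedding)))
    print("float32 bytes:", len(encode_f32(embedding)))
```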

NVIDIA’s Role

It’s tempting to reduce NVIDIA’s role to “selling GPUs,” but in hybrid stacks, their software stack matters as much as the silicon.

- Triton Inference Server: Deployable in ECS/EKS, it simplifies multi-model serving and integrates neatly with gRPC/HTTP interfaces that Lambdas can call.

- TensorRT optimizations: Crucial when squeezing latency out of LLM inference. Without it, GPU cycles get wasted.

- CUDA integration with deep learning frameworks: Makes it viable to run specialized models (say, custom healthcare NLP) without rewriting half the stack.
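To make the Triton point concrete, here is a minimal sketch of the JSON body a Lambda could send to Triton's HTTP endpoint, which follows the KServe v2 inference protocol. The model name `claims_ner` and the tensor name `INPUT0` are hypothetical; the real names come from your model configuration.

```python
from typing import List


def infer_path(model_name: str) -> str:
    """Path of the v2 inference endpoint for a given model."""
    return f"/v2/models/{model_name}/infer"


def build_infer_request(input_name: str, values: List[float], shape: List[int],
                        datatype: str = "FP32") -> dict:
    """Build a v2 inference request with one input tensor (data flattened row-major)."""
    expected = 1
    for dim in shape:
        expected *= dim
    if expected != len(values):
        raise ValueError(f"shape {shape} implies {expected} values, got {len(values)}")
    return {
        "inputs": [
            {"name": input_name, "shape": shape, "datatype": datatype, "data": values}
        ]
    }


if __name__ == "__main__":
    # Hypothetical model and tensor names, for illustration only.
    print(infer_path("claims_ner"))
    print(build_infer_request("INPUT0", [1.0, 2.0, 3.0, 4.0], [1, 4]))
```

Validating the shape in Lambda is cheap and keeps malformed requests from ever reaching the GPU.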

AWS’s Role: Beyond Lambda

Although Lambda is the headline player here, AWS has several supporting services that make hybrids realistic.

- API Gateway handles request entry, throttling, and routing.

- EventBridge can trigger Lambdas for asynchronous tasks (useful for batch inference).

- ECS/EKS with GPU instances hosts the inference servers themselves.

- Step Functions orchestrate multi-step workflows that need both Lambdas and GPU calls.
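For the Step Functions case, a workflow definition is just a JSON document in Amazon States Language. The sketch below builds one as a Python dict for a preprocess, infer, postprocess chain; the state names and the idea that the middle task is a Lambda that calls the GPU service are assumptions, and the ARNs are supplied by the caller.

```python
import json


def build_definition(preprocess_arn: str, infer_arn: str, postprocess_arn: str) -> dict:
    """Return a States Language definition for a three-step inference workflow."""
    # Retry the GPU-facing step with exponential backoff on task failures.
    retry = [{
        "ErrorEquals": ["States.TaskFailed"],
        "IntervalSeconds": 2,
        "MaxAttempts": 3,
        "BackoffRate": 2.0,
    }]
    return {
        "Comment": "Preprocess -> GPU inference -> postprocess (illustrative)",
        "StartAt": "Preprocess",
        "States": {
            "Preprocess": {"Type": "Task", "Resource": preprocess_arn, "Next": "Infer"},
            "Infer": {"Type": "Task", "Resource": infer_arn, "Retry": retry,
                      "Next": "Postprocess"},
            "Postprocess": {"Type": "Task", "Resource": postprocess_arn, "End": True},
        },
    }


if __name__ == "__main__":
    # Placeholder ARNs; substitute real function ARNs before deploying.
    definition = build_definition("<preprocess-arn>", "<infer-arn>", "<postprocess-arn>")
    print(json.dumps(definition, indent=2))
```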

Some teams even route GPU inference through Amazon SageMaker endpoints, though in practice, SageMaker can be heavier and less flexible than Triton on ECS for high-frequency workloads.

An Architectural Decision: Where to Keep State

Agentic systems often need memory—conversations, context, and history. That doesn’t fit neatly into either Lambda or GPU inference nodes, since both are designed to be stateless. So where do you store it?

- Redis or DynamoDB are common choices for session memory.

- Vector databases (like Pinecone or pgvector on RDS) hold embeddings.

- S3 acts as cheap cold storage for artifacts or logs.

Some architects try to shoehorn state into Lambda layers or GPU containers. It almost always backfires, leading to sticky sessions and scaling headaches. The hybrid pattern really only shines when you externalize state cleanly.
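One way to picture clean externalization: the handler never holds conversation history itself, it reads and writes through a small store interface. The in-memory class below is only a stand-in for Redis or DynamoDB so the sketch runs locally; the class and method names are hypothetical.

```python
import time
from typing import Callable, Dict, List, Tuple


class InMemorySessionStore:
    """Local stand-in for an external store: same get/append shape, no durability."""

    def __init__(self, ttl_seconds: float = 3600, clock: Callable[[], float] = time.time):
        self._ttl = ttl_seconds
        self._clock = clock
        self._data: Dict[str, Tuple[float, List[dict]]] = {}

    def get_history(self, session_id: str) -> List[dict]:
        entry = self._data.get(session_id)
        if entry is None:
            return []
        expires_at, turns = entry
        if self._clock() >= expires_at:
            del self._data[session_id]  # expired sessions are dropped
            return []
        return list(turns)

    def append_turn(self, session_id: str, role: str, content: str) -> None:
        turns = self.get_history(session_id)
        turns.append({"role": role, "content": content})
        self._data[session_id] = (self._clock() + self._ttl, turns)


def handle_turn(store, session_id: str, user_message: str,
                infer: Callable[[List[dict]], str]) -> str:
    """Stateless handler: load history, run inference, persist both turns."""
    history = store.get_history(session_id)
    reply = infer(history + [{"role": "user", "content": user_message}])
    store.append_turn(session_id, "user", user_message)
    store.append_turn(session_id, "assistant", reply)
    return reply
```

Because `handle_turn` keeps nothing between calls, any Lambda instance or GPU node can serve any session, which is what removes the need for sticky sessions.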

Real-World Example: Claims Processing

A healthcare insurer needed an AI agent that read claim documents, extracted structured fields, and flagged anomalies. Their workloads were bursty: hundreds of claims at 3 am, then nothing for hours.

- Lambda did intake: it validated document formats, scrubbed PHI, and batched payloads.

- The GPU container ran inference, using an OCR + NLP model stack optimized with TensorRT.

- A post-processing Lambda translated model output into structured JSON and wrote it to DynamoDB.

The outcome? GPU utilization shot up from 15% (when everything ran on monolithic GPU servers) to 65%. They slashed costs by 40% because idle GPU hours nearly disappeared. The tradeoff was occasional latency spikes from Lambda cold starts—but for a back-office claims system, that was acceptable.

Tradeoffs Worth Calling Out

Hybrid GPU + Lambda setups are attractive, but they’re not a universal recipe. Some nuanced tradeoffs matter:

- Elasticity vs. predictability: If your workload is predictable (say, nightly batch processing), reserved GPU nodes might be cheaper than orchestrating Lambdas.

- Latency vs. cost: Lambdas add hops. For ultra-low latency (financial trading, conversational agents), sometimes a pure GPU service is cleaner.

- Operational complexity vs. modularity: Hybrid systems are harder to debug but easier to evolve. If you expect your agent workflows to change monthly, modularity pays off.

Practical Guidelines for Teams Considering This

Some lessons learned—often the hard way—by teams that built such hybrids:

- Start by profiling workloads. Know how often inference happens, how big the payloads are, and what the latency budget is.

- Don’t put heavy lifting in Lambda. Keep it lean—routing, light preprocessing, not ML itself.

- Use async patterns when possible. EventBridge + SQS + Lambda → GPU works better for batch tasks than synchronous API calls.

- Benchmark GPU utilization. Idle GPUs are where money burns; keep them saturated during spikes.

- Invest in observability. Tracing across API Gateway, Lambda, ECS, and GPU logs isn’t optional.
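For the async pattern, a queue-driven Lambda might look like the sketch below. It assumes an SQS event source with partial batch responses (`ReportBatchItemFailures`) enabled, so only failed messages are retried; `run_inference` is a hypothetical placeholder for the call to the GPU service.

```python
import json
from typing import Callable


def run_inference(payload: dict) -> dict:
    """Placeholder for the real call to the GPU inference service (assumption)."""
    raise NotImplementedError("wire this to your inference endpoint")


def handler(event: dict, context=None,
            infer: Callable[[dict], dict] = run_inference) -> dict:
    """Process an SQS batch; report only the failed messages back for retry."""
    failures = []
    for record in event.get("Records", []):
        try:
            payload = json.loads(record["body"])
            infer(payload)
        except Exception:
            # Returning the messageId tells SQS to redeliver just this message.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Without partial batch responses, one bad message causes the whole batch to be retried, which means repeated GPU calls for work that already succeeded.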

Where This Might Be Heading

The lines between GPU and serverless worlds are already blurring. NVIDIA is pushing for “serverless inference” with Triton integrations. AWS is experimenting with GPU-accelerated serverless (though not quite mainstream yet). It wouldn’t shock me if, in a few years, Lambda itself could invoke GPU kernels under the hood.

Until then, hybrids remain a transitional pattern: powerful, slightly awkward, but cost-saving in the right hands.

Conclusion

Hybrid GPU + serverless designs aren’t a silver bullet, but they represent a pragmatic step forward in the evolution of agentic AI infrastructure. Enterprises no longer have to choose between overpaying for idle GPU clusters and underdelivering on performance. Instead, they can selectively fuse NVIDIA’s acceleration stack with AWS’s serverless ecosystem to build systems that flex with demand.

That said, success depends on discipline: keeping Lambda lightweight, externalizing state, and investing early in observability. Teams that underestimate the operational complexity often find themselves drowning in cold starts and network bottlenecks. Teams that get it right, however, unlock a level of cost efficiency and elasticity that pure GPU or pure serverless architectures rarely achieve.

For now, hybrids remain transitional—powerful, sometimes awkward, but increasingly relevant. And as AWS experiments with GPU-accelerated serverless and NVIDIA pushes serverless inference natively, the distinction between “GPU clusters” and “Lambda functions” may blur entirely. Until then, hybrid thinking gives agentic AI builders an edge: speed when it matters, savings when it doesn’t.