Key Takeaways

- Bedrock’s strength is abstraction, but subtle differences across hosted models can break assumptions.

- CodeWhisperer adds practical glue, bridging agent reasoning and executable AWS automation.

- Custom LLMs embed business rules, yet their lifecycle management (drift, retraining, monitoring) is the real challenge.

- Friction points—latency, context limits, AWS lock-in—must be accounted for early in design.

- Agents thrive in bounded, high-value workflows, not in long-horizon strategy or unstructured problem spaces.

Autonomous agents are the current hot topic in enterprise tech strategy meetings, but beyond the buzzword, there’s a serious architectural discussion happening. Enterprises are now integrating decision-making systems into their workflows, moving far beyond simple chatbots. AWS is strategically positioning its AI services, including Bedrock and CodeWhisperer, as foundational tools for developing these sophisticated systems.


Why AWS Bedrock Is Showing Up in Agentic Architectures

Bedrock’s pitch is almost too neat: “Use foundation models from multiple providers without worrying about infrastructure.” For enterprises used to weeks of GPU provisioning requests, it’s a welcome relief.

But Bedrock isn’t just about convenience. What makes it attractive for agent design is abstraction without model-vendor lock-in. You can swap out an Anthropic model for a Cohere one, or pull in a Titan variant when you need tighter AWS integration. In other words, your agent isn’t married to a single model vendor.

The nuance? Bedrock isn’t as plug-and-play as the marketing suggests. Model parity is inconsistent; capabilities differ in subtle ways. An agent built with Claude for reasoning might fail when switched to Titan due to differences in token limits or tool-use reliability. I’ve seen prototypes collapse because someone assumed “all models in Bedrock behave the same.” They don’t.
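
One way to guard against that assumption is to write down what the agent needs from a model and check a candidate against it before swapping. The sketch below is a minimal illustration: the `ModelProfile` fields, the model names, and every number are hypothetical placeholders, not published Bedrock limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Capabilities the agent relies on. All values used below are illustrative."""
    name: str
    max_context_tokens: int
    supports_tool_use: bool


@dataclass(frozen=True)
class AgentRequirements:
    min_context_tokens: int
    needs_tool_use: bool


def swap_blockers(model: ModelProfile, needs: AgentRequirements) -> list[str]:
    """Return the reasons a model cannot stand in for the current one (empty if none)."""
    blockers = []
    if model.max_context_tokens < needs.min_context_tokens:
        blockers.append(
            f"{model.name}: context {model.max_context_tokens} "
            f"< required {needs.min_context_tokens}"
        )
    if needs.needs_tool_use and not model.supports_tool_use:
        blockers.append(f"{model.name}: no tool-use support")
    return blockers


# Hypothetical profiles; look up real limits in the provider documentation.
reasoning_model = ModelProfile("model-a", max_context_tokens=100_000, supports_tool_use=True)
fallback_model = ModelProfile("model-b", max_context_tokens=8_000, supports_tool_use=False)
needs = AgentRequirements(min_context_tokens=32_000, needs_tool_use=True)

print(swap_blockers(reasoning_model, needs))
print(swap_blockers(fallback_model, needs))
```

Running a check like this in CI, against profiles you maintain yourself, turns "all models behave the same" from an assumption into something that fails loudly.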

That being said, if you’re aiming to orchestrate multiple agents, Bedrock provides a neutral ground. It handles scaling, quota management, and compliance. And in regulated industries, that last one isn’t trivial—CIOs like knowing the legal team can find the vendor names in a dropdown instead of chasing a new open-source repo every week.

CodeWhisperer in the Agent Toolkit

CodeWhisperer doesn’t usually show up in conversations about autonomous agents, but it should. Here’s why: most enterprise agents need runtime adaptability. They’re not just answering questions—they’re writing SQL, crafting ETL jobs, or generating snippets that plug into proprietary systems.

That’s where CodeWhisperer fits. It can:

- Suggest domain-specific code patterns that align with AWS APIs.

- Generate glue logic between services—think Lambda functions, Step Functions definitions, or DynamoDB queries.

- Serve as a guardrail by nudging developers toward AWS-native implementations (sometimes a blessing, sometimes a nudge toward more lock-in).

Of course, CodeWhisperer has blind spots. It’s heavily optimized for AWS contexts, which is fine if your stack is already deep in the ecosystem. If not, you’ll hit friction—especially with hybrid architectures where agents interact with systems like SAP, Salesforce, or on-prem data lakes. In those cases, CodeWhisperer’s “helpfulness” becomes more of a gentle suggestion than a working solution.

Still, for operationalizing agents in production pipelines, I’ve seen teams use CodeWhisperer as a scaffolding layer. Agents generate pseudo-code or abstract workflow definitions, and then CodeWhisperer refines that into actual deployable Lambda code. Is it perfect? No. But it accelerates the handoff between abstract reasoning and executable automation.
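
To make "abstract workflow definition" concrete, here is a rough sketch of what an agent might emit and how it could be turned into a linear Step Functions definition in Amazon States Language. This is not how CodeWhisperer works internally; the `to_state_machine` helper, the step names, and the ARNs are all hypothetical and only illustrate the shape of the handoff.

```python
import json


def to_state_machine(steps: list[dict]) -> dict:
    """Turn an ordered list of {"name", "lambda_arn"} steps into a linear
    Amazon States Language definition where each Task state runs one Lambda."""
    if not steps:
        raise ValueError("workflow needs at least one step")
    states = {}
    for i, step in enumerate(steps):
        state = {"Type": "Task", "Resource": step["lambda_arn"]}
        if i + 1 < len(steps):
            state["Next"] = steps[i + 1]["name"]  # chain to the following step
        else:
            state["End"] = True  # last step terminates the state machine
        states[step["name"]] = state
    return {"StartAt": steps[0]["name"], "States": states}


# Hypothetical workflow as an agent might describe it; the ARNs are placeholders.
workflow = [
    {"name": "FetchTransactions",
     "lambda_arn": "arn:aws:lambda:us-east-1:123456789012:function:fetch"},
    {"name": "Reconcile",
     "lambda_arn": "arn:aws:lambda:us-east-1:123456789012:function:reconcile"},
]
definition = to_state_machine(workflow)
print(json.dumps(definition, indent=2))
```

The Lambda bodies themselves are where generated code would still need human review before deployment.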

The Role of Custom LLMs

If Bedrock gives you the marketplace and CodeWhisperer gives you the glue, custom LLMs give you domain specificity. No enterprise really wants to expose raw foundation models to its operations. Why? Because generic models hallucinate. They don’t understand your policies, your jargon, or your compliance requirements.

Custom fine-tuning (whether through Bedrock’s customization options or running your own Hugging Face stack on SageMaker) solves three practical issues:

- Context compression: Instead of dumping 20 pages of SOPs into a prompt, a fine-tuned model internalizes that behavior.

- Guardrails: Fine-tuning can train policy constraints into the model’s behavior, reducing (though not eliminating) reliance on brittle prompt templates.

- Efficiency: Custom LLMs often require fewer tokens per interaction, which matters when your bill is tied to token usage.
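
The efficiency point is easy to sanity-check with back-of-the-envelope arithmetic. Every figure below is hypothetical (call volume, tokens per call, and price), and the calculation ignores output tokens and any hosting fee for the custom model, so treat it as the shape of the comparison rather than a forecast.

```python
def monthly_token_cost(calls_per_month: int, tokens_per_call: int,
                       price_per_1k_tokens: float) -> float:
    """Cost of input tokens only; output tokens and hosting fees are ignored."""
    return calls_per_month * tokens_per_call / 1000 * price_per_1k_tokens


# All figures are hypothetical, chosen only to show the calculation.
CALLS = 200_000
PRICE = 0.003  # assumed dollars per 1,000 input tokens

# Prompt carries the SOPs on every call vs. a fine-tuned model with a short prompt.
prompt_stuffed = monthly_token_cost(CALLS, tokens_per_call=12_000, price_per_1k_tokens=PRICE)
fine_tuned = monthly_token_cost(CALLS, tokens_per_call=1_500, price_per_1k_tokens=PRICE)

print(f"prompt-stuffed: ${prompt_stuffed:,.2f}")
print(f"fine-tuned:     ${fine_tuned:,.2f}")
```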

But here’s the catch: enterprises tend to underestimate the MLOps overhead. Training is the easy part. Ongoing monitoring for drift, evaluating against changing compliance rules, and deciding when to retrain—those are the headaches. I’ve seen projects stall not because the model was bad, but because nobody budgeted for the governance layer.
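
A governance layer does not have to start big. A minimal sketch, assuming you keep a labeled set of compliance cases and a baseline pass rate, is to re-run that set on a schedule and flag the model when it slips. The function names, the 5-point tolerance, and the toy policy model are assumptions for illustration.

```python
from typing import Callable


def pass_rate(model: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Fraction of (prompt, expected_label) cases the model answers correctly."""
    if not cases:
        raise ValueError("evaluation set is empty")
    hits = sum(1 for prompt, expected in cases if model(prompt) == expected)
    return hits / len(cases)


def needs_retraining(current: float, baseline: float, tolerance: float = 0.05) -> bool:
    """Flag the model when its pass rate drops more than `tolerance` below baseline."""
    return current < baseline - tolerance


# Stand-in for a deployed policy model that only knows an old approval limit.
def stale_policy_model(prompt: str) -> str:
    amount = int(prompt.split()[-1])
    return "allow" if amount <= 10_000 else "deny"


# Hypothetical cases written after the approval limit was lowered to 5,000.
updated_cases = [
    ("transfer 2000", "allow"),
    ("transfer 7000", "deny"),
    ("transfer 9000", "deny"),
    ("transfer 20000", "deny"),
]

rate = pass_rate(stale_policy_model, updated_cases)
print(rate, needs_retraining(rate, baseline=0.95))
```

The model here did not change at all; the rules did. That is the kind of drift a static accuracy number from training time will never show.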

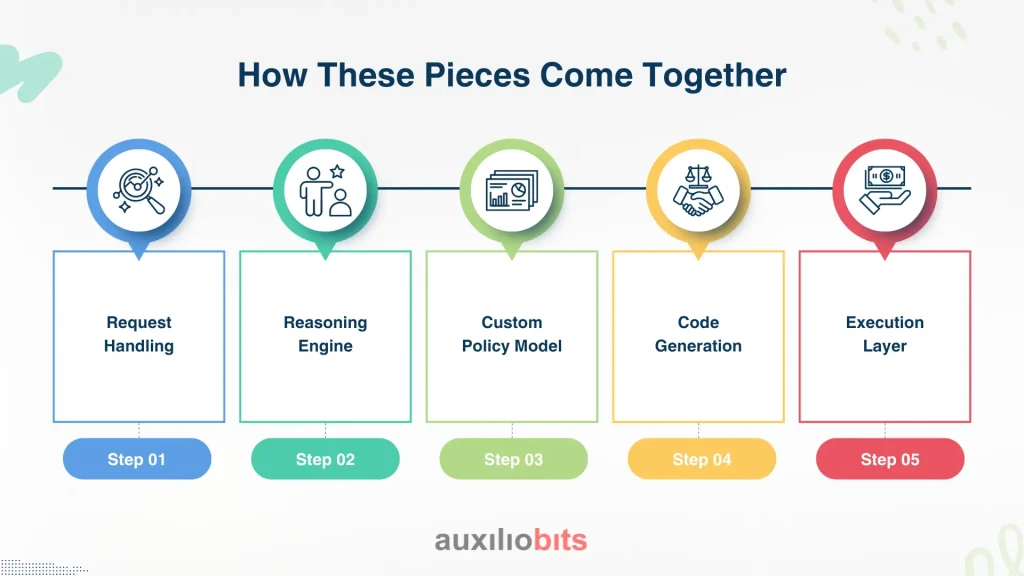

How These Pieces Come Together

So how do Bedrock, CodeWhisperer, and custom models actually assemble into an autonomous agent? Here is a flow typical of a real-world finance deployment:

- Request Handling—An employee asks an agent to reconcile transactions across two systems.

- Reasoning Engine—The agent uses a Bedrock-hosted foundation model to parse intent and plan steps.

- Custom Policy Model—Before execution, a fine-tuned model (trained on compliance data) checks if the planned steps violate any internal rules.

- Code Generation—The agent drafts a script to query DynamoDB and format the reconciliation output. CodeWhisperer refines it into a Lambda function.

- Execution Layer—The Lambda runs, results are piped back, and the agent summarizes the outcome for the employee.
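
The steps above can be sketched as a small orchestration function. This is an illustration of the control flow under stated assumptions, not a reference implementation: `run_agent`, `AgentResult`, and the four injected callables are hypothetical names, and the stubs stand in for the Bedrock model, the fine-tuned policy model, the code-generation step, and the Lambda invocation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentResult:
    status: str  # "completed" or "blocked"
    detail: str


def run_agent(
    request: str,
    plan: Callable[[str], list[str]],              # reasoning model: request -> steps
    violations: Callable[[list[str]], list[str]],  # policy model: steps -> rule violations
    generate_code: Callable[[list[str]], str],     # code generation: steps -> script
    execute: Callable[[str], str],                 # execution layer: script -> output
) -> AgentResult:
    steps = plan(request)
    problems = violations(steps)
    if problems:
        # Stop before any code is generated or run.
        return AgentResult("blocked", "; ".join(problems))
    script = generate_code(steps)
    return AgentResult("completed", execute(script))


# Stubs standing in for the real services, to show the control flow only.
def stub_plan(request: str) -> list[str]:
    return ["query ledger A", "query ledger B", "diff results"]


ok = run_agent("reconcile", stub_plan, lambda s: [],
               lambda s: "\n".join(s), lambda code: "3 mismatches")
blocked = run_agent("reconcile", stub_plan, lambda s: ["touches restricted table"],
                    lambda s: "", lambda code: "")
print(ok)
print(blocked)
```

Passing each stage in as a callable keeps the policy check impossible to skip and lets you test the flow without touching AWS.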

The Hidden Frictions

It’s tempting to paint this stack as clean and elegant. In practice, three frictions repeatedly show up:

- Latency: Bedrock’s hosted models introduce non-trivial round-trip times. In workflows requiring sub-second responses, this is a killer.

- Context limits: Even with fine-tuning, you can’t escape context window ceilings. Complex, multi-system workflows hit those walls fast.

- AWS ecosystem gravity: While Bedrock advertises choice, once CodeWhisperer and Lambda are in the mix, you’re increasingly committed to AWS patterns. That’s not inherently bad, but it’s a strategic decision—not one to stumble into.
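
The first two frictions are measurable, so it helps to instrument them early. Below is a minimal sketch: `timed` wraps any model call to record its latency, and `trim_history` keeps the most recent messages that fit a token budget. The 4-characters-per-token estimate is a crude assumption; a real system would use the model's own tokenizer.

```python
import time
from typing import Callable


def timed(call: Callable[[], str]) -> tuple[str, float]:
    """Run a model call and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = call()
    return result, time.perf_counter() - start


def estimate_tokens(text: str) -> int:
    """Crude estimate of about 4 characters per token (an assumption)."""
    return max(1, len(text) // 4)


def trim_history(messages: list[str], budget_tokens: int) -> list[str]:
    """Keep the most recent messages whose estimated tokens fit the budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = ["a" * 400, "b" * 200, "c" * 100]
trimmed = trim_history(history, budget_tokens=80)
reply, seconds = timed(lambda: "stubbed model reply")
print(len(trimmed), f"{seconds:.6f}s")
```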

Where It Actually Works Well

This triad shines in a few areas:

- Customer service triage: Agents can escalate or resolve tickets by integrating with CRM data and generating scripts on the fly.

- Financial compliance checks: Custom LLMs ensure rules are applied consistently, while Bedrock handles the language parsing.

- Data engineering automation: CodeWhisperer plus Bedrock reasoning creates pipelines without manual hand-coding for every edge case.

Notice what these have in common: bounded tasks with clear inputs and outputs. That’s the sweet spot for agentic systems today.

When It Falls Apart

And where does it stumble? Anywhere requiring long-horizon planning or open-ended reasoning. For example:

- Multi-quarter demand forecasting that needs macroeconomic data mixed with internal sales figures.

- Complex negotiations or contract drafting where nuance trumps speed.

- Situations where agents need to maintain persistent context across weeks or months.

These aren’t just technical gaps; they’re fundamental limits of today’s LLM architectures. No amount of CodeWhisperer glue will solve them.

Bedrock Isn’t Enough on Its Own

There’s a tendency in enterprise circles to treat Bedrock as the “answer” to autonomous agents. It isn’t. Bedrock is plumbing. Valuable plumbing, but still plumbing. The intelligence lives in the orchestration logic, the custom models, and the guardrails you build on top.

In other words, if your strategy slide says, “We’ll just use Bedrock for agents,” you’re underestimating the engineering required.

Building for Maintainability

One final note that often gets overlooked: autonomous agents aren’t static deployments. They evolve. Regulations shift, APIs change, data distributions drift. Designing with replaceable components is the only sane approach.

- Swap out models in Bedrock when performance changes.

- Retrain custom LLMs quarterly to reflect updated policy.

- Rotate CodeWhisperer-generated code through human review cycles.
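
In code, "replaceable components" mostly means the agent depends on an interface rather than a specific model client. A minimal sketch, assuming a hypothetical `TextModel` interface and an `EchoModel` stand-in for a real hosted model:

```python
from typing import Protocol


class TextModel(Protocol):
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in for a hosted model client; a real one would call the provider."""

    def __init__(self, tag: str) -> None:
        self.tag = tag

    def complete(self, prompt: str) -> str:
        return f"[{self.tag}] {prompt}"


class Agent:
    """Depends only on the TextModel interface, so models can be swapped by role."""

    def __init__(self, models: dict[str, TextModel]) -> None:
        self.models = dict(models)

    def swap(self, role: str, model: TextModel) -> None:
        if role not in self.models:
            raise KeyError(f"unknown role: {role}")
        self.models[role] = model

    def ask(self, role: str, prompt: str) -> str:
        return self.models[role].complete(prompt)


agent = Agent({"reasoning": EchoModel("model-a"), "policy": EchoModel("policy-v1")})
before = agent.ask("policy", "check step")
agent.swap("policy", EchoModel("policy-v2"))  # e.g. after a retrain
after = agent.ask("policy", "check step")
print(before)
print(after)
```

With roles kept separate, a policy-model retrain never forces a change to the reasoning model or the orchestration code.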

Agents aren’t products; they’re ongoing programs. The AWS stack helps, but it doesn’t absolve you from lifecycle management.

Conclusion

Building autonomous agents on AWS isn’t about chasing buzzwords or flipping a switch in Bedrock. It’s about carefully assembling services that play well together while recognizing their limits. Bedrock offers flexibility but doesn’t remove the need for orchestration discipline. CodeWhisperer accelerates code integration but shines mostly in AWS-heavy environments. And custom LLMs are where domain expertise truly lives—though they demand an ongoing governance commitment that many teams underestimate.

If there’s one pattern worth noting, it’s this: success comes when enterprises treat agents as evolving systems, not finished products. The AWS stack reduces the operational burden, but responsibility for reliability, compliance, and adaptability remains squarely in the hands of the builders.