Key Takeaways

- Voice assistants work best when supporting micro-actions (e.g., retrieving a specific lab result) rather than full conversational workflows.

- Context-awareness and tight EHR integration are the real differentiators—generic voice dictation tools rarely succeed in clinical settings.

- Physician adoption grows only when the assistant demonstrates explicit, measurable time savings during real-world use.

- Poorly designed voice systems (too chatty, too rigid, or linguistically tone-deaf) quickly lose clinician trust and get abandoned.

- The next evolution is toward agentic voice assistants that not only execute single commands, but also monitor patient data and surface proactive recommendations.

Smart Voice Assistants for Doctors: Retrieve Charts, Update Notes, Send Orders

It’s almost strange how long it’s taken for hospitals and clinics to adopt voice interfaces in a meaningful way. We have consumer-grade voice assistants switching off living room lights, but a surgeon still has to scrub out or ask a nurse to pull up a CT scan. “Alexa, show me the last lab values” should have been a reality ten years ago, but it wasn’t, for a mix of regulatory, integration, and cultural reasons. The tide is turning, albeit slowly and unevenly.

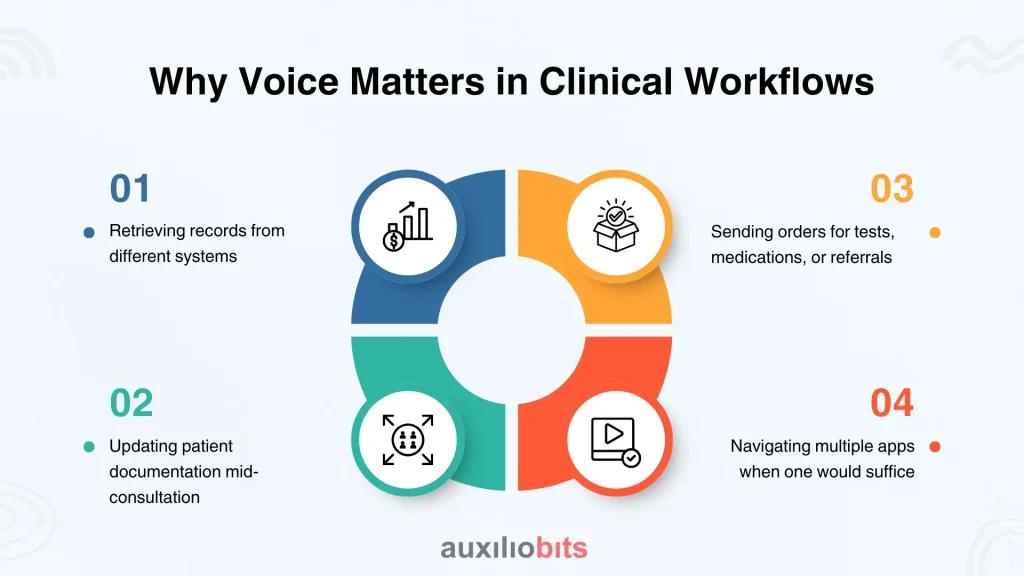

Why Voice Matters in Clinical Workflows

A physician’s day is a constant patchwork of small but context-switching tasks:

- Retrieving records from different systems

- Updating patient documentation mid-consultation

- Sending orders for tests, medications, or referrals

- Navigating multiple apps when one would suffice

These fragments accumulate. Even the most efficient EHR user loses 30–40 minutes per day to navigation and manual data entry. Voice assistants, when properly designed, remove friction in the most critical moments—especially when the user’s hands are occupied.

There’s a catch, though. A naïve voice assistant produces more frustration than value. Many early pilots failed because they tried to replace too much (long-form dictation, full EHR browsing) instead of focusing on precise micro-actions. Physicians don’t want to “converse” with software. They want to command it.

Three High-Value Use Cases

1. Retrieve Charts Without Breaking Focus

In practice, a physician rarely needs an entire record. They need the last potassium result, the previous ECG, or the discharge summary from cardiology.

Bad voice design tries to ask, “Which part of the chart would you like?”

Good voice design understands context: What diagnosis are we working with? What screen is open? What is frequently retrieved in this scenario?

Real usage sounds like:

- “Show me her last two imaging studies.”

- “Bring up the cardiology discharge note.”

- “What was the last CRP value?”

The assistant must parse not only the command but also the clinical context (ICD codes, current encounter type, specialty). Otherwise, it becomes another workflow step.
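As a minimal sketch of what combining the command with chart context could look like, the snippet below turns a spoken lab request into a FHIR-style `Observation` search string. The `ChartContext` class, the `LAB_CODES` table, and `build_lab_query` are hypothetical names for illustration, and the LOINC codes are an illustrative subset that a real deployment would take from its own terminology service.

```python
from dataclasses import dataclass
from urllib.parse import urlencode

# Hypothetical mapping from spoken lab names to LOINC codes (illustrative subset).
LAB_CODES = {
    "crp": "1988-5",        # C reactive protein, serum or plasma
    "potassium": "2823-3",  # Potassium, serum or plasma
}


@dataclass
class ChartContext:
    """Assumed context supplied by the EHR: whose chart is open right now."""
    patient_id: str


def build_lab_query(utterance: str, ctx: ChartContext, count: int = 1) -> str:
    """Combine the spoken lab name with chart context into a FHIR search string."""
    words = utterance.lower().replace("?", "").split()
    lab = next((w for w in words if w in LAB_CODES), None)
    if lab is None:
        raise ValueError("no known lab name in utterance")
    params = {
        "patient": ctx.patient_id,                      # resolved from context, not speech
        "code": f"http://loinc.org|{LAB_CODES[lab]}",
        "_sort": "-date",                               # newest result first
        "_count": count,
    }
    return "Observation?" + urlencode(params)


query = build_lab_query("What was the last CRP value?", ChartContext(patient_id="pt-42"))
```

The point of the sketch is that the patient never appears in the utterance: the open chart supplies it, which is why the physician can say “her last” and still get the right record.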

2. Update Notes in the Middle of a Conversation

Voice is not a replacement for structured documentation. It’s an augmentation for insert-and-annotate actions during the examination itself.

For example:

- “Add that the patient reports intermittent stabbing pain behind the right shoulder.”

- “Update physical exam: mild crackles on auscultation.”

A notes assistant becomes genuinely useful when it automatically tags the input in the appropriate section (Subjective, Objective, etc.) and attaches a timestamp. One orthopedic team in Minnesota shortened its average exam by 5 minutes by using voice for micro-updates, not for the entire note.
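The tagging step could be sketched roughly as follows. This is a keyword-based stand-in, not a description of any real product: the cue phrases in `SECTION_CUES`, the `NoteEntry` type, and the `tag_note` function are all assumptions, and a production system would more likely use a trained classifier.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

# Hypothetical cue phrases per note section; checked in this order.
SECTION_CUES = {
    "Subjective": ("patient reports", "patient states", "complains of"),
    "Objective": ("physical exam", "on auscultation", "vitals"),
    "Plan": ("patient requests", "refer", "follow up"),
}


@dataclass
class NoteEntry:
    section: str
    text: str
    recorded_at: datetime


def tag_note(utterance: str, now: Optional[datetime] = None) -> NoteEntry:
    """File a dictated snippet under the first section whose cue phrase it contains."""
    lowered = utterance.lower()
    section = "Unfiled"  # fallback so nothing is silently misfiled
    for name, cues in SECTION_CUES.items():
        if any(cue in lowered for cue in cues):
            section = name
            break
    return NoteEntry(section, utterance, now or datetime.now(timezone.utc))


entry = tag_note("Update physical exam: mild crackles on auscultation.")
```

Falling back to an explicit “Unfiled” bucket rather than guessing keeps the clinician in control of anything the tagger cannot place.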

3. Send Orders Instantly—At the Moment of Decision

Order entry is one of the most interrupted tasks. A clinician is ready to order a chest CT, then gets pulled into another thought because the order screen is four clicks away. If you allow them to say, “Order CT chest with contrast,” while still reviewing vitals, that cognitive link remains intact.

But nuance matters. The assistant should read the order back once (“CT chest with contrast. Correct?”) and proceed only after the user affirms. Stacking a second, generic “Are you sure?” on top of that read-back every single time leads to abandonment.

For compliance reasons, voice assistants also need to be deeply integrated with computerized physician order entry (CPOE) systems. A standalone voice-to-text engine isn’t enough.
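The single read-back confirmation can be modeled as a tiny two-state flow. The sketch below is illustrative only: `OrderSession`, its `hear` method, and the `AFFIRMATIVE` word list are hypothetical, and the `submitted` list stands in for whatever call the CPOE integration would actually make.

```python
from dataclasses import dataclass, field
from typing import List, Optional

AFFIRMATIVE = {"yes", "correct", "confirm"}  # assumed set of accepted confirmations


@dataclass
class OrderSession:
    submitted: List[str] = field(default_factory=list)  # stand-in for CPOE submission
    pending: Optional[str] = None                        # order awaiting confirmation

    def hear(self, utterance: str) -> str:
        cleaned = utterance.strip().rstrip(".!?")
        text = cleaned.lower()
        if self.pending is not None:
            # Exactly one confirmation step: anything but an affirmative cancels.
            order, self.pending = self.pending, None
            if text in AFFIRMATIVE:
                self.submitted.append(order)
                return f"Ordered {order}."
            return "Cancelled."
        if text.startswith("order "):
            self.pending = cleaned[len("order "):]
            return f"{self.pending}. Correct?"
        return "Not an order."


session = OrderSession()
prompt = session.hear("Order CT chest with contrast")
result = session.hear("Correct")
```

Treating any non-affirmative reply as a cancel is a deliberately conservative choice here: the clinician can always repeat the order, but an unintended order is harder to undo.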

What Makes This Work (And What Breaks It)

What helps:

- Integration with context-aware data models (EHR APIs, FHIR services)

- Specialty tuning (orthopedics uses different vocab than oncology)

- Local accents and phoneme libraries (a Texas internist sounds different from a Mumbai cardiologist)

- Short command structures (typically 3–5 words, no more)

What hurts:

- Excessive dialog (“I’m sorry, I didn’t get that—can you repeat?”)

- Requiring clinicians to learn new grammar rules

- Lack of clinician control (no easy way to interrupt or override)

One team at a mid-sized hospital switched off voice support because the assistant kept misinterpreting “fluids” as “flu vaccine.” A single error of that kind is enough to lose trust, especially in critical care settings.

Context-Specific Examples

| Care Setting | Voice Assistant Usage | Nuances |
| --- | --- | --- |
| Emergency Department | “Order stat CT head.” | Must auto-mark as stat and bypass standard queues |
| ICU | “Show sedation level trends.” | Needs to aggregate data from multiple monitors |
| Outpatient Clinic | “Add note: patient requests MRI referral.” | Context-aware placement in the plan section |
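Short commands like those in the table lend themselves to simple intent matching with slots. The sketch below uses regular expressions with named groups as slots; the patterns, the intent names, and `parse_command` are assumptions made for illustration, and they cover only a couple of phrasings.

```python
import re
from typing import Dict, Optional

# Hypothetical intent patterns; each named group is a slot to fill.
INTENT_PATTERNS = {
    "order_imaging": re.compile(
        r"^order (?P<priority>stat )?(?P<modality>ct|mri|x-ray) (?P<body_part>\w+)"
        r"(?: (?P<contrast>with|without) contrast)?$"
    ),
    "show_lab": re.compile(r"^what was the last (?P<lab>\w+) value$"),
}


def parse_command(utterance: str) -> Optional[Dict[str, object]]:
    """Return the first matching intent with its filled slots, or None."""
    text = utterance.strip().rstrip(".?!").lower()
    for intent, pattern in INTENT_PATTERNS.items():
        match = pattern.match(text)
        if match:
            # Drop slots the speaker did not mention (e.g. no priority given).
            slots = {k: v.strip() for k, v in match.groupdict().items() if v}
            return {"intent": intent, "slots": slots}
    return None


parsed = parse_command("Order stat CT head.")
```

Because “stat” is captured as its own slot, downstream code can mark the order as urgent without the clinician saying anything extra.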

Layering AI: From Rule-Based to Agentic Voice

Most current assistants are command-based: they follow predefined intents and slot filling. But the direction is very clearly moving toward agentic behaviors—where the assistant can resolve multi-step goals.

For instance: “Check recent hemoglobin trends and notify me if it drops below 9 over the next 48 hours.” That’s not just a command. It’s a future condition plus a monitoring workflow. A lightweight agent (running alongside the EHR) could:

- Retrieve historic lab values

- Define a watch condition

- Trigger alerting if the threshold is crossed
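The three steps above can be sketched as a small data structure plus a check. Everything here is hypothetical (the `WatchCondition` class, the `breaches` method, the sample readings and dates), and the sketch leaves out how results would actually arrive from the EHR and how the alert would be delivered.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple

Reading = Tuple[datetime, float]  # (time the result was reported, value)


@dataclass(frozen=True)
class WatchCondition:
    """A 'notify me if X drops below Y over the next Z hours' request."""
    lab: str
    threshold: float
    started_at: datetime
    duration: timedelta

    @property
    def expires_at(self) -> datetime:
        return self.started_at + self.duration

    def breaches(self, readings: Iterable[Reading]) -> List[Reading]:
        """Readings inside the watch window that fall below the threshold."""
        return [
            (when, value)
            for when, value in readings
            if self.started_at <= when <= self.expires_at and value < self.threshold
        ]


start = datetime(2024, 1, 1, 8, 0)  # made-up start time for the example
watch = WatchCondition("hemoglobin", 9.0, start, timedelta(hours=48))
readings = [
    (start - timedelta(hours=6), 8.7),   # historic value, before the watch began
    (start + timedelta(hours=12), 9.4),  # in window, above threshold
    (start + timedelta(hours=30), 8.8),  # in window, below threshold: should alert
    (start + timedelta(hours=60), 8.5),  # after the watch expired
]
alerts = watch.breaches(readings)
```

Giving the watch an explicit expiry matters: a condition that never lapses would keep alerting long after the clinical question behind it has gone away.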

Some of this is already happening in high-acuity units. One critical care unit in Spain has agents matching peripheral oxygen saturation with FiO2 settings and recommending ventilation changes through voice prompts.

How to Design for Adoption

Let’s be blunt: doctors are skeptical. Many enjoy complaining about EHRs—it’s almost a sport. To convince them, a voice assistant must:

- Be invisible in terms of cognitive load

- Show direct, tangible time savings (don’t say “up to 15%”—demonstrate it in a two-week pilot)

- Allow for immediate override (keyboard/mouse still works at any moment)

- Respect user preferences (one cardiologist may want “recent labs” sorted chronologically; another wants reverse chronological)

One behavioral trick that has worked well is providing real-time usage metrics back to the clinicians (“You saved 12 minutes today using voice”). It transforms the assistant from a novelty to a productivity tool.

What’s Around the Corner?

Expect to see more multimodal assistants: voice + on-screen suggestions (e.g., after a query, a side panel auto-expands showing related diagrams). Also, the next iteration will support implicit queries—interpreting patterns rather than explicit requests. For instance:

After seeing three episodes of rising blood pressure, the assistant quietly surfaces recent antihypertensive changes without being asked.

That level of proactivity blurs the line between passive assistant and active clinical agent. Some clinicians will initially hate it. Others will never go back.
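One simple way to read “three episodes of rising blood pressure” is that the three most recent readings are each higher than the one before. The sketch below implements only that interpretation; `has_rising_streak` is a hypothetical helper, and a real trigger would need clinically validated criteria rather than a bare comparison.

```python
from typing import Sequence


def has_rising_streak(systolic: Sequence[float], length: int = 3) -> bool:
    """True if the most recent `length` readings are strictly increasing."""
    if length < 2 or len(systolic) < length:
        return False  # not enough data to call it a trend
    recent = systolic[-length:]
    return all(earlier < later for earlier, later in zip(recent, recent[1:]))


should_surface = has_rising_streak([140, 128, 134, 141])
```

When the check passes, the assistant would surface the related medication changes quietly on screen instead of interrupting by voice.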

Final Thought

Not every workflow deserves voice. Some are better left to structured clicks. But in high-information, low-bandwidth environments—like ICU rounds, trauma resuscitations, or outpatient consultations—the difference between searching and simply asking is everything. Sometimes the smartest technology does nothing more glamorous than shave off five seconds. And yet those five seconds, multiplied by hundreds of micro-tasks a day, define whether clinical work feels tolerable or exhausting.

Voice doesn’t need to be futuristic. It just needs to be respectful of how clinicians work.