Key Takeaways

- Planning, not scripting, is the core differentiator of autonomous agents. They decide how to solve a problem, not just what to say.

- Integration quality determines reliability. Without clean access to CRM, ticketing, and knowledge data, even the most advanced agents slip into generic replies.

- Preventive escalation handling often delivers higher ROI than reactive handling. It’s cheaper to address dissatisfaction before the customer formally escalates.

- Decision boundaries must be clearly defined and regularly reviewed. Overly loose or overly tight constraints both lead to failure.

- Success isn’t about full automation. It’s about the agent knowing when to hand off—and doing it early, not 40 messages later.

Escalations are the part of customer service nobody likes to talk about openly—except when reporting KPIs in a boardroom. Even the most mature service organizations struggle with maintaining response consistency when the escalation queue starts growing at 3 a.m. The typical “follow-the-sun” model helped for a while, but even distributed teams misalign when rules, tone, and resolution protocols differ across geographies.

That’s exactly where autonomous agents started gaining traction: not as a gimmick, but as a viable operational buffer between the customer and the human escalation analyst.


Why Escalation Handling Needs a Different Kind of Automation

Standard chatbots are designed to answer FAQs or resolve simple tasks. Escalations, on the other hand, are inherently messy and high-stakes. They contain:

- Emotion-laden communication

- Multi-step resolution trees

- Real-time dependencies on systems (CRM, knowledge base, ticketing tools)

- Context that spans days (sometimes weeks)

Trying to automate this with traditional RPA or NLP chatbots often ends up making the experience worse. A polite “Please contact our support team” message at 2:00 a.m. is not an escalation response. It’s a delay.

Autonomous agents, however, operate differently. They don’t need a predefined path; they can plan the path.

What an Autonomous Escalation Agent Does When Implemented Correctly

An effective escalation-handling agent is not just a conversational interface. It functions a bit like a dynamic system orchestrator:

- Identifies the nature of the escalation by analyzing both structured and unstructured inputs

- Plans a response workflow (pull data from CRM, check warranty rules, find previous tickets, etc.)

- Executes API-level interactions (creating/updating tickets, issuing concessions, triggering RPA bots)

- Communicates updates to the customer in natural language—incorporating previous tone and context

- Escalates to a human when business or ethical thresholds are met

Notice the order. Planning before executing is what differentiates autonomous agents from normal bots.
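A minimal sketch of that plan-then-execute order, under heavy assumptions: the keyword classifier, the step names, the `HUMAN_ONLY` set, and the stub handlers are all hypothetical placeholders for a real model and real CRM, warranty, and ticketing integrations.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Escalation:
    customer_id: str
    message: str
    category: str = "unknown"
    log: List[str] = field(default_factory=list)


def classify(esc: Escalation) -> str:
    """Toy classifier: keyword matching stands in for a real model."""
    text = esc.message.lower()
    if "warranty" in text:
        return "warranty_dispute"
    if "malfunction" in text or "broken" in text:
        return "equipment_malfunction"
    return "general"


# Assumed business rule: categories the agent never resolves on its own.
HUMAN_ONLY = {"equipment_malfunction"}


def plan(esc: Escalation) -> List[str]:
    """Build the full ordered list of steps before anything is executed."""
    if esc.category in HUMAN_ONLY:
        return ["fetch_crm_record", "handoff_to_human"]
    steps = ["fetch_crm_record", "find_previous_tickets"]
    if esc.category == "warranty_dispute":
        steps.append("check_warranty_rules")
    steps += ["update_ticket", "notify_customer"]
    return steps


def stub_handlers() -> Dict[str, Callable[[Escalation], None]]:
    """Hypothetical handlers that only record which step ran."""
    names = [
        "fetch_crm_record", "find_previous_tickets", "check_warranty_rules",
        "update_ticket", "notify_customer", "handoff_to_human",
    ]
    return {name: (lambda esc, n=name: esc.log.append(n)) for name in names}


def handle(esc: Escalation,
           handlers: Dict[str, Callable[[Escalation], None]]) -> List[str]:
    esc.category = classify(esc)
    steps = plan(esc)  # the whole plan exists before any side effect happens
    for step in steps:
        handlers[step](esc)
    return steps


escalation = Escalation("C-1", "My warranty claim was rejected twice")
print(handle(escalation, stub_handlers()))
```

Because `plan` returns the whole step list up front, the plan can be logged, reviewed, or rejected before any ticket is touched, which is harder to do when decisions and side effects are interleaved.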

Reactive vs Proactive: The Important Distinction

Most teams focus on the reactive phase of escalation (“customer complains → agent responds”). But once you deploy an autonomous agent, you quickly realize something counterintuitive: the agent’s biggest value appears in the preventive phase.

Because it has full access to interaction history and internal knowledge, the agent can spot recurring dissatisfaction signals (long response times, negative sentiment trends, and repeated requests for supervisor contact). That lets it initiate remediation before an official escalation ticket is submitted.

For example:

- Detecting negative sentiment in three separate chat conversations within a single week and triggering a reach-out email

- Identifying a delayed delivery and proactively offering a discount coupon

- Noticing a spike in “refund request” keywords and alerting a human escalation manager

Is that strictly “escalation handling”? Arguably yes, because it’s almost always cheaper to intervene early than to let the customer escalate.
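The first signal above (three negative chats in one week) could be checked with something like the sketch below. The score range of -1 to 1, the `NEGATIVE_THRESHOLD` value, and the function name are assumptions for illustration; the sentiment scores themselves would come from whatever model the team already uses.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

# Assumed conventions: sentiment scores range from -1 (negative) to 1 (positive).
NEGATIVE_THRESHOLD = -0.3
MIN_NEGATIVE_CHATS = 3
WINDOW = timedelta(days=7)


def needs_outreach(chats: List[Tuple[datetime, float]], now: datetime) -> bool:
    """Return True when one customer has enough negative chats in the window.

    `chats` holds (timestamp, sentiment_score) pairs for a single customer.
    """
    recent_negative = [
        ts for ts, score in chats
        if ts <= now and now - ts <= WINDOW and score < NEGATIVE_THRESHOLD
    ]
    return len(recent_negative) >= MIN_NEGATIVE_CHATS


now = datetime(2024, 5, 10, 12, 0)
history = [
    (now - timedelta(days=1), -0.6),
    (now - timedelta(days=3), -0.5),
    (now - timedelta(days=6), -0.4),
]
if needs_outreach(history, now):
    print("queue reach-out email")
```

Keeping the threshold, count, and window as named constants makes them easy to review alongside the other decision boundaries.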

Key Architectural Considerations

Deploying an autonomous escalation agent is not just about plugging a large language model into an interface. Enterprises that succeed with these agents invest in architecture first and the LLM later.

1. Integration Layer

The agent needs low-friction access to:

- CRM (Salesforce, HubSpot, ServiceNow)

- Ticketing systems (Zendesk, Freshdesk, Jira)

- Knowledge bases (Confluence, SharePoint, custom DBs)

- Transactional systems (order management, billing)

Hard truth: if two of these systems aren’t integrated, the agent ends up hallucinating or looping back to human handoff 70% of the time.

2. Decision Boundaries

One of the more overlooked areas. The agent should know:

- When it can issue refunds, discounts, or credits

- When it can’t (legal, compliance, or financial approval required)

Hard-coded rules often fail here. A better approach is a hybrid: agent-triggered policies with business-rule overrides.
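One way to read that hybrid is sketched below: the agent proposes an action, and a thin rule layer has the final say. The limits in `AUTO_LIMITS`, the `legal_hold` flag, and all type names are hypothetical; real values would come from finance and compliance.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    DENY = "deny"


@dataclass(frozen=True)
class ProposedAction:
    kind: str                      # e.g. "refund", "discount", "credit"
    amount: float
    customer_flags: frozenset = frozenset()


# Hypothetical per-action ceilings the agent may approve on its own.
AUTO_LIMITS = {"refund": 100.0, "discount": 50.0, "credit": 75.0}


def review(action: ProposedAction) -> Verdict:
    """Business-rule override applied to whatever the agent proposes."""
    # Hard override: compliance flags always win over the agent's proposal.
    if "legal_hold" in action.customer_flags:
        return Verdict.DENY
    limit = AUTO_LIMITS.get(action.kind)
    # Unknown action types and amounts above the ceiling go to a human.
    if limit is None or action.amount > limit:
        return Verdict.NEEDS_APPROVAL
    return Verdict.ALLOW


print(review(ProposedAction("refund", 40.0)))
print(review(ProposedAction("refund", 400.0)))
```

The agent stays free to decide what to propose, while the limits live in one reviewable table rather than being scattered through prompts.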

3. Safety Layer

No matter how well you train the model, there will be corner cases. That’s why most mature deployments include:

- A human-in-the-loop override channel

- Real-time monitoring (intent classifications, anomaly detection)

- Prompt version control

A Real Example

A global manufacturing company deployed an autonomous agent to handle customer escalations related to spare part replacements. Initially, the agent was configured to handle only Tier-1 complaints. Within four months, 38% of Tier-2 escalations were being managed autonomously. Key usage scenarios:

| Escalation Type | Autonomous Resolution? | Notes |
| --- | --- | --- |
| Missing spare part shipment | ✅ | Agent proactively detected shipping delay and offered expedited reorder |
| Incorrect billing | ⚠️ | The agent collected the info and routed it to the finance analyst |
| Warranty dispute | ✅ | The agent pulled the warranty record and confirmed coverage |
| Equipment malfunction | ❌ | High-risk scenario; immediately routed to field technician |

The lesson wasn’t that the agent could handle everything. The real takeaway was that it knew when not to.

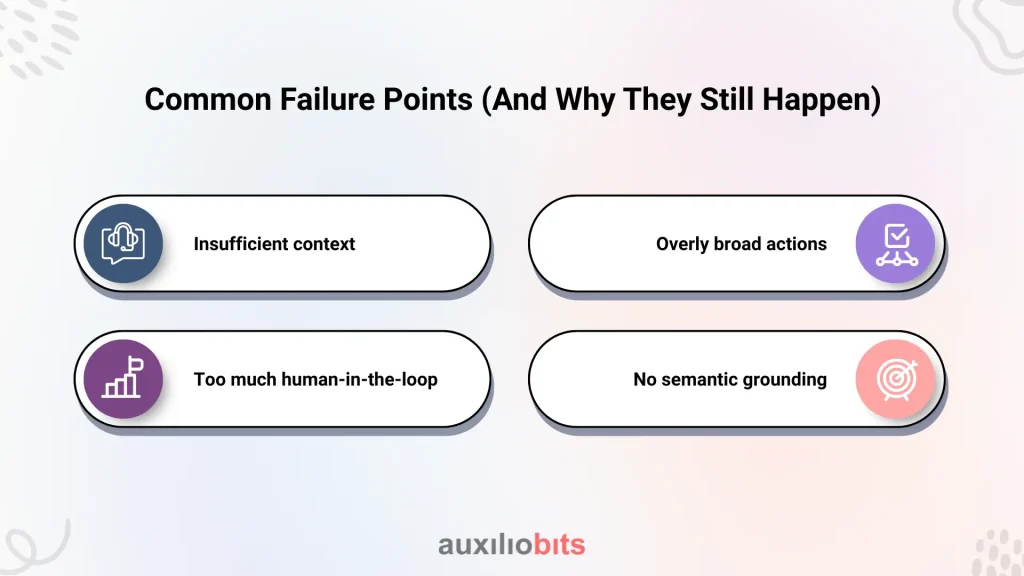

Common Failure Points (And Why They Still Happen)

Some teams still struggle—and for almost predictable reasons:

- Insufficient context: Agent receives only chat data but no CRM context → generic responses

- Overly broad actions: Agent allowed to issue partial refunds without any threshold → inconsistent business outcomes

- Too much human-in-the-loop: Agent requires manual approval for nearly everything and ends up acting like a glorified workflow engine

- No semantic grounding: Prompts rely entirely on abstract examples, ignoring actual company tone and policy nuances → production results vary wildly

Interestingly, some of the “safeguards” teams put in to protect themselves turn into bottlenecks. One large insurer added 9 approval checkpoints in the agent’s escalation flow. The result? Customer wait times increased by 56%. They reverted to only 3 checkpoints, with higher accountability on the oversight side. Response performance improved immediately.

Day-One Metrics to Monitor

In practice, the first metrics to move tend to be qualitative, not quantitative. Teams often realize this only after the fact.

Watch for:

- Percentage of escalations that end up being handed back to humans

- Average response time after the agent takes over

- Consistency of tone and resolution structure (yes, you can measure this via clustering)

- Escalation reopen rate (an overlooked but essential metric)

- Customer satisfaction ratings for agent-managed interactions vs human-managed ones

Don’t just track resolution time. A fast but irritating response still leads to churn.
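Several of these metrics fall out of a simple pass over closed escalations. The sketch below assumes a hypothetical `Record` shape with four fields; tone consistency and response time are left out because they need message-level data.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Iterable, List, Optional


@dataclass
class Record:
    agent_started: bool            # the agent took the escalation first
    handed_back: bool              # the agent later handed it to a human
    reopened: bool
    csat: Optional[float] = None   # None when the customer gave no rating


def _rate(hits: int, total: int) -> Optional[float]:
    return hits / total if total else None


def _avg(values: Iterable[Optional[float]]) -> Optional[float]:
    rated = [v for v in values if v is not None]
    return mean(rated) if rated else None


def summarize(records: List[Record]) -> Dict[str, Optional[float]]:
    agent_started = [r for r in records if r.agent_started]
    agent_closed = [r for r in agent_started if not r.handed_back]
    human_closed = [r for r in records if not r.agent_started or r.handed_back]
    return {
        "handback_rate": _rate(sum(r.handed_back for r in agent_started),
                               len(agent_started)),
        "reopen_rate": _rate(sum(r.reopened for r in records), len(records)),
        "csat_agent": _avg(r.csat for r in agent_closed),
        "csat_human": _avg(r.csat for r in human_closed),
    }


sample = [
    Record(True, False, False, 5),
    Record(True, False, True, 3),
    Record(True, True, False, 4),
    Record(False, False, False, 2),
]
print(summarize(sample))
```

Escalations that were handed back are counted on the human side for CSAT here; that is a design choice, and a team might prefer to report them as a third group.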

What a Mature Escalation Agent Looks Like

In organizations that have been running autonomous agents for 12+ months, the agent typically evolves into something that resembles:

- A persistent digital advisor with context memory

- A planner that continuously optimizes its workflows (retrains on successful resolutions)

- A collaborator—frequently “asking” for data or handoff when needed

Not surprisingly, these agents eventually become the main source of escalation analytics. Some organizations have even built internal training programs around insights produced by the agent.

Final Thoughts

Autonomous agents shouldn’t be seen as a way to replace people in escalation teams. Their real value is in taking care of the urgent, repetitive tasks—especially outside of normal working hours—so human experts can focus on the tricky parts that need judgment and experience. When the agent is connected to the right systems, follows clear rules, and keeps learning from real interactions, it becomes a very practical tool. The companies that succeed with these agents treat them like an extra team member on the night shift—dependable, consistent, and aligned with how the business wants to handle escalations.