Key Takeaways

- DevOps for AI demands specialized tools and practices over conventional software deployment

- Versioning should go beyond code to cover model weights, prompts, and the RAG component.

- Automated testing should assess functionality, bias, hallucinations, and performance.

- Progressive deployment strategies are necessary to counter the risks of LLM updates.

- Ongoing tracking of both technical and ethical measurements provides secure AI performance.

Integrating DevOps procedures with artificial intelligence (AI) workloads is now a key foundational element in enterprises deploying huge language models (LLMs). As AI agents shift from experimentation into production environments, the urgency of having stronger continuous integration and continuous deployment (CI/CD) pipelines increases.

Unlike other software, LLM deployments have special challenges—enormous model sizes, dynamic prompt crafting, retrieval-augmented generation (RAG) pipelines, and ethics like bias avoidance. This blog discusses how DevOps concepts are being redefined to adapt to these problems and make effective, scalable, and durable LLM deployment a reality

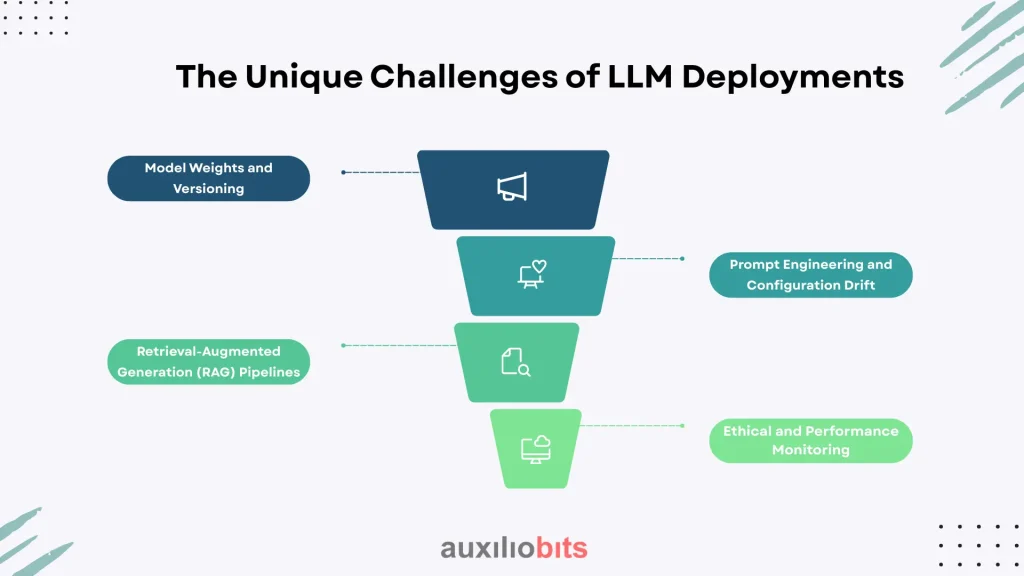

The Unique Challenges of LLM Deployments

The deployment of LLMs requires a paradigm shift in CI/CD practices. Traditional pipelines focus on code changes and binary artifacts, but LLM systems introduce multidimensional variables:

Model Weights and Versioning

LLMs like GPT-4 or Llama 3 are composed of billions of parameters stored as enormous binary files. Since the weights are not code, they need specialized version control systems. MLflow and DVC (Data Version Control) enable teams to track model checkpoints, metadata, training hyperparameters, and dataset versions. Hugging Face Model Hub, for instance, offers a centralized registry for storing and sharing model versions, which provides reproducibility across environments.

Prompt Engineering and Configuration Drift

Even minor changes in prompts or system commands can significantly alter model behavior. Poorly tested prompt updates can add hallucinations or biased output. Version-controlled repositories of prompts, with automated validation pipelines, are required to avoid configuration drift.

Retrieval-Augmented Generation (RAG) Pipelines

Most LLM use cases depend on RAG architectures, in which vector databases (such as Elasticsearch and Redis) fetch contextual information before responding. Embedding model or indexing strategy changes must be proven for accuracy and latency. For instance, updating an embedding model without retraining can adversely affect retrieval performance, leading to irrelevant output.

Ethical and Performance Monitoring

LLMs have the potential to generate misinformation or biased text. Regular monitoring for hallucination rates, fairness metrics, and response coherence is required. Tools like Evidently AI automatically detect bias, while bespoke evaluation pipelines estimate task-specific metrics like ROUGE scores in summarization.

Building a CI/CD Pipeline for LLMs

An adequately designed CI/CD pipeline for LLMs combines four essential phases: version control, test automation, continuous integration, and incremental deployment.

Version Control: Going beyond Code

LLM pipelines demand fine-grained tracking of:

- Model weights associated with training datasets and hyperparameters

- Prompts in structured repositories (e.g., YAML files) with semantic versioning

- RAG components, such as vector database schemas and embedding models

- Tools such as DVC and Weights & Biases facilitate this by versioning big files externally while keeping Git-based metadata intact. For instance, a pipeline could point to a saved model checkpoint in Amazon S3 through a DVC-controlled pointer file, facilitating lightweight versioning.

Automated Testing: From Unit Tests to Bias Detection

- Testing LLMs requires multi-level validation:

- Functional Testing: Check fundamental input-output correctness

- Benchmark Evaluation: Assess performance against carefully curated datasets

- Bias and Hallucination Checks: Use classifiers to flag toxic content or factual errors

- Latency and Cost Profiling: Confirm inference latency and cloud expense

CI pipelines for LLMs streamline

- Training models on refreshed datasets via Kubernetes-managed GPU clusters

- Model, prompt, and dependency containerization into Docker images

- Regression testing to identify performance degradation

- Progressive Deployment: Reducing Risk

- LLM updates are rolled out stepwise to reduce disruption:

- Canary Releases: Send 5% of production traffic to the new model and observe metrics

- A/B Testing: Measure new versus legacy models on user interaction

- Rollback Strategies: Automatically roll back to stable revisions on failure

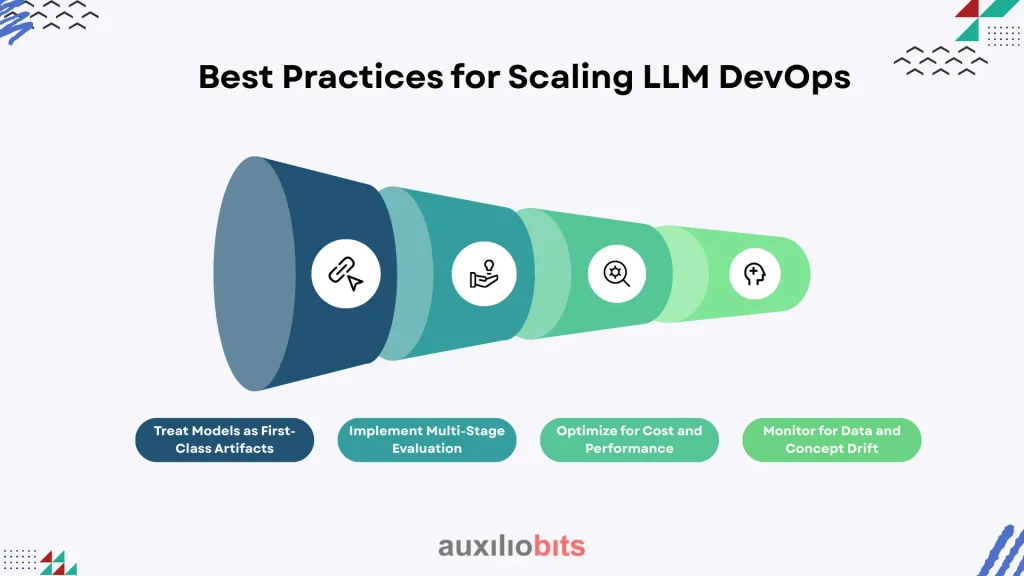

Best Practices for Scaling LLM DevOps

Below are best practices for scaling LLMOps, informed by current industry insights and tailored to address computational, operational, and ethical challenges.

Treat Models as First-Class Artifacts

Utilize a Model Registry (e.g., MLflow) to preserve lineage from training data to deployed endpoints. This simplifies auditability and debugging when things break.

Implement Multi-Stage Evaluation

Hybridize automated metrics with human-in-the-loop evaluation. For instance, human evaluators can test a sample of outputs before full deployment.

Optimize for Cost and Performance

Use techniques like quantization (trading off model accuracy) and dynamic batch sizes. NVIDIA Triton Inference Server automates batching, improving GPU utilization.

Monitor for Data and Concept Drift

Enforce detectors to monitor changes in input data distribution (data drift) or waning model relevance (concept drift). AI drift detection modules may trigger retraining pipelines accordingly.

Conclusion

Scaling large language models requires transforming DevOps to include models, prompts, retrieval pipelines, and ethical guardrails as class artifacts. Applying exact version control, multi-tiered automated and human-in-the-loop validation, incremental rollouts, and ongoing performance and bias monitoring allows organizations to minimize risk and preserve agility. These customized CI/CD practices provide scalable, reliable, and auditable AI operations.