Key Takeaways

- Secure communication between AI agents is essential to protect customer data, business logic, and system integrity from cyber threats and unauthorized access.

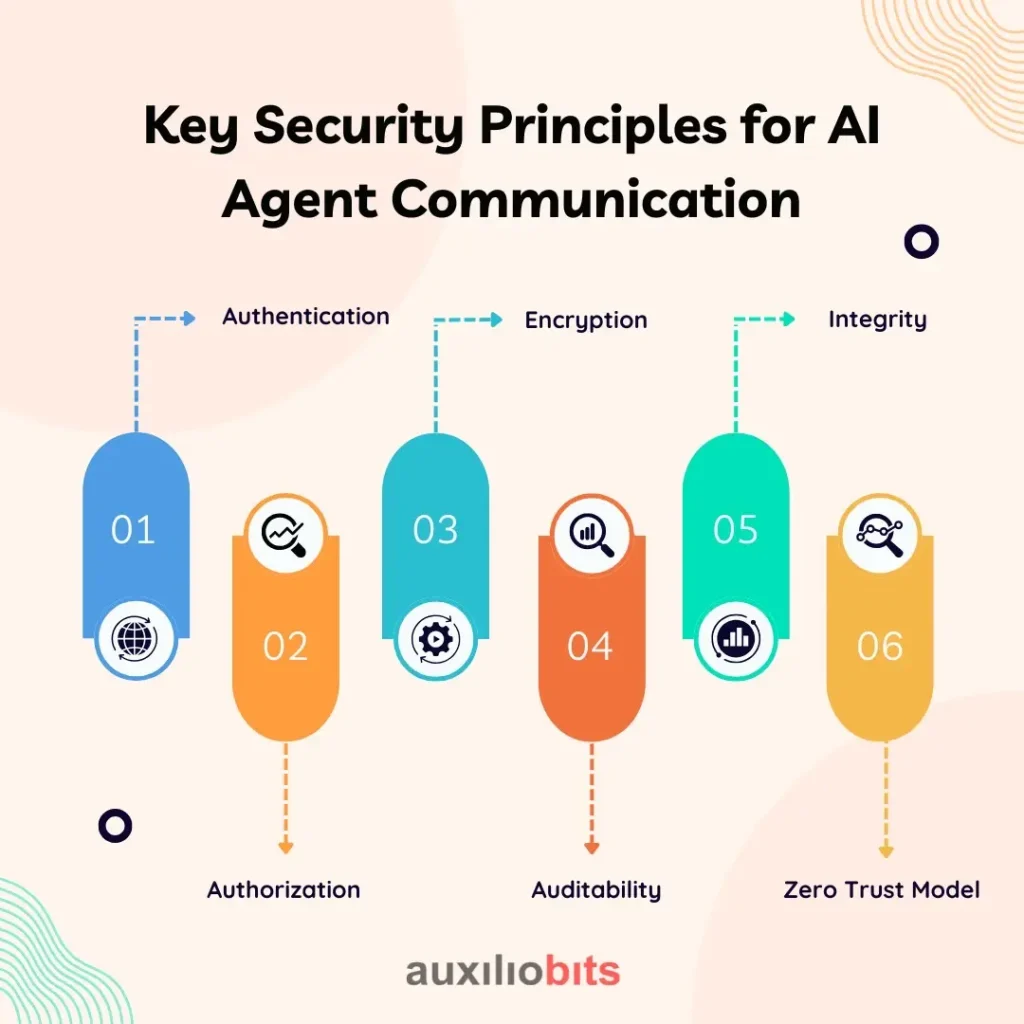

- Core security principles—authentication, authorization, encryption, auditability, integrity, and zero trust—must guide every AI agent interaction in enterprise environments.

- Enterprise-grade architecture patterns, such as API gateways, service meshes, and secure message queues, help enforce communication security across distributed AI systems.

- Zero Trust Network Architecture and blockchain-based logging defend against internal threats, lateral movement, and data tampering.

- Security measures such as RBAC, secrets management, data masking, and threat detection enhance trust, compliance, and operational resilience.

Companies of all types now use artificial intelligence agents to make timely decisions and to interact with users and systems. One essential aspect is often taken for granted: secure communication. AI agents connect to databases, call third-party services, and talk to each other, and in each case data passes between several components. If that communication is not secure, it becomes an easy target for attackers. Securing AI agents’ communication is therefore of utmost importance: it protects sensitive information, keeps customer data in safe hands, and prevents attackers from altering the messages exchanged between agents.

To avoid this, firms must follow strong security practices. First, use encrypted communication. Protocols such as TLS, which underpins HTTPS, protect data sent between systems or agents so that no outsider can read it. Second, use authentication and authorization. Every agent should prove who it is before accessing a system or service, and should be allowed to do only what it has been granted permission to do. This ensures that only trusted agents interact with the network.

Also, consider using secure API gateways and firewalls. These tools help monitor, filter, and control data flows. They block suspicious activities and stop unauthorized access. Another good practice is to log all agent communications. This helps detect security issues early and provides an audit trail.

Finally, use a zero-trust model. This means never automatically trusting any agent or system, even one inside your network. Every request must be verified.


Why Secure Communication Between AI Agents Matters

AI agents are becoming powerful tools in modern enterprises. They can perform many manual, slow, and error-prone tasks. Today’s AI agents can trigger automated workflows, access sensitive customer data, interact with APIs and systems, and make real-time decisions. These abilities make them valuable, but they also introduce new security challenges.

When AI agents communicate with each other or with other systems, they exchange essential data. This can include customer records, financial details, internal business logic, or access credentials. If this data is not secured correctly, it becomes an easy target for cyber attackers. A single weak link in communication can lead to serious consequences.

Here’s what’s at risk:

1. Data breaches

If attackers can intercept communication between AI agents, they may steal personal information, trade secrets, or business-critical data. This can harm the business’s reputation and lead to legal action.

2. Unauthorized system access

If agent identities are not verified, malicious agents can impersonate real ones and gain access to systems they should not control. This can lead to system manipulation or sabotage.

3. Compliance violations

Strict regulations like GDPR, HIPAA, or PCI-DSS govern many industries. Unsecured data communication can lead to non-compliance, which results in hefty fines and penalties.

4. Loss of customer trust

Security issues reduce customer confidence. Users who feel their data is unsafe may stop using your services or switch to competitors.

That’s why security is not a “nice to have”—it’s essential. Every communication between AI agents must be protected using encryption, authentication, and monitoring tools. AI agents should only exchange data after verifying each other’s identity, and all information should travel through secure channels. Role-based access, audit trails, and zero-trust principles strengthen security posture.

Key Security Principles for AI Agent Communication

Before designing secure architectures for AI agent communication, it’s essential to understand the foundational security principles that guide how agents should interact. These principles are necessary to ensure trust, data protection, and system resilience in any enterprise environment.

1. Authentication

Authentication is the process of verifying the identity of the agents and systems involved in communication. Just like users log in with usernames and passwords, AI agents must prove who they are before interacting with other services or systems. This can be done using secure credentials like API keys, tokens, or digital certificates. Without authentication, malicious agents could impersonate trusted ones and gain access to critical systems.
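As a rough illustration of API-key authentication, the sketch below stores only a hash of each agent's key and compares hashes in constant time. The in-memory registry and the names `register_agent` and `authenticate` are assumptions made for this example; a production system would more likely rely on an identity provider or certificates.

```python
import hashlib
import hmac
import secrets

# Hypothetical in-memory registry: agent ID -> SHA-256 hash of its API key.
# A real deployment would keep this in a database or secrets manager.
_KEY_HASHES: dict[str, str] = {}


def register_agent(agent_id: str) -> str:
    """Create a random API key for an agent and store only its hash."""
    api_key = secrets.token_urlsafe(32)
    _KEY_HASHES[agent_id] = hashlib.sha256(api_key.encode()).hexdigest()
    return api_key  # handed to the agent once, never stored in plain text


def authenticate(agent_id: str, presented_key: str) -> bool:
    """Return True only if the presented key matches the registered agent."""
    expected = _KEY_HASHES.get(agent_id)
    if expected is None:
        return False  # unknown agents are rejected
    presented = hashlib.sha256(presented_key.encode()).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, presented)
```

Storing only the hash means that a leaked registry does not directly reveal usable keys.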

2. Authorization

After authentication, authorization determines what actions an agent is allowed to perform. Even if an agent is recognized as valid, it should only access the data or services for which it’s been granted permission. Role-based access control (RBAC) or attribute-based access control (ABAC) can help define these permissions. This prevents over-privileged agents from causing harm or leaking sensitive data.
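A minimal RBAC check might look like the following sketch. The role names, agent IDs, and permission strings are invented for illustration; the point is that access is denied unless a role explicitly grants it.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "support-agent": {"tickets:read", "tickets:write"},
    "reporting-agent": {"tickets:read", "invoices:read"},
}

# Hypothetical mapping of agent IDs to a single role each.
AGENT_ROLES = {
    "agent-42": "support-agent",
    "agent-77": "reporting-agent",
}


def is_authorized(agent_id: str, permission: str) -> bool:
    """Deny by default: unknown agents and unknown roles get no access."""
    role = AGENT_ROLES.get(agent_id)
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Here `agent-77` can read tickets but cannot write them, which is the kind of over-privilege the principle is meant to prevent.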

3. Encryption

Encryption protects data from being read by unauthorized parties, and protocols like TLS (Transport Layer Security) also detect tampering in transit. All communication between AI agents should be encrypted with such protocols so that intercepted data cannot be understood. Encryption should also be applied to data at rest, for example when an agent stores a file or message for later use.
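For encryption in transit, a client-side agent written in Python could build its TLS settings with the standard `ssl` module, as in this sketch. The function name `build_client_tls_context` and the TLS 1.2 floor are choices made for the example, not requirements stated in this article.

```python
import ssl


def build_client_tls_context() -> ssl.SSLContext:
    """Create a client-side TLS context with certificate checks enabled."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The resulting context can be passed to standard-library clients, for example `http.client.HTTPSConnection(host, context=context)`.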

4. Auditability

Keeping a record of all agent communications and activities is crucial. Audit logs help track who did what and when. These logs can be used for troubleshooting, performance monitoring, and forensic analysis during security breaches. Logs also help meet compliance requirements and maintain transparency.
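One simple way to capture "who did what and when" is to emit one structured record per agent action. The field names below are assumptions chosen for this sketch; real deployments usually follow whatever schema their logging platform expects.

```python
import json
from datetime import datetime, timezone


def audit_record(agent_id: str, action: str, resource: str, allowed: bool) -> str:
    """Build one JSON line describing who did what, to what, and when."""
    event = {
        "timestamp": datetime.now(timezone.utc).isoformat(),  # always UTC
        "agent_id": agent_id,
        "action": action,
        "resource": resource,
        "allowed": allowed,  # record denied attempts as well as successes
    }
    # sort_keys keeps field order stable, which makes logs easier to compare.
    return json.dumps(event, sort_keys=True)
```

Logging denied requests alongside allowed ones tends to be useful during forensic analysis, since failed attempts often precede a breach.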

5. Integrity

Data integrity ensures that messages are not changed during transit. If a message is tampered with or corrupted, the receiving agent should be able to detect this. Techniques like message signing, checksums, and hash functions help verify that the data received is exactly what was sent.
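Message signing can be sketched with an HMAC over the serialized payload. This example assumes the two agents already share a secret key (how that key is distributed is out of scope here), and the function names are illustrative.

```python
import hashlib
import hmac
import json


def _canonical(payload: dict) -> bytes:
    """Serialize the payload the same way on both sides before hashing."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()


def sign_message(payload: dict, shared_key: bytes) -> dict:
    """Attach an HMAC-SHA256 signature computed over the serialized payload."""
    signature = hmac.new(shared_key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_message(message: dict, shared_key: bytes) -> bool:
    """Recompute the signature and compare it in constant time."""
    expected = hmac.new(shared_key, _canonical(message["payload"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["signature"])
```

If any field of the payload changes after signing, or the receiver holds a different key, `verify_message` returns `False` and the receiving agent can discard the message.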

6. Zero Trust Model

The Zero Trust approach assumes no implicit trust, even within an internal network. Every request between agents must be verified, regardless of where it comes from. This model uses strict identity verification, access controls, and continuous monitoring to secure all communications.

Common Architecture Patterns to Secure Agent Communication

Let’s look at the architecture patterns enterprises use to protect communication between AI agents.

1. API Gateway with Authentication & Rate Limiting

Use Case:

When AI agents need to access or expose APIs.

How It Works:

- Agents communicate through a central API Gateway.

- The gateway enforces OAuth 2.0, JWT tokens, or API keys.

- It limits access using rate limiting to prevent abuse.

- All data exchanged is TLS encrypted.

Benefits:

- Centralized security

- Fine-grained access control

- Easy monitoring and logging
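Gateways usually provide rate limiting as a built-in feature, but the underlying idea can be sketched with a token bucket. The class below is an illustration only and does not reflect any particular gateway's implementation; the injectable `clock` parameter is an assumption added to make the behavior easy to test.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.clock = clock
        self.last_refill = clock()

    def allow(self) -> bool:
        """Consume one token if available; otherwise reject the request."""
        now = self.clock()
        elapsed = now - self.last_refill
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway would typically keep one bucket per agent identity, so that a single misbehaving agent cannot exhaust capacity for the others.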

2. Service Mesh with Mutual TLS (mTLS)

Use Case:

In microservices environments where agents run as distributed services.

How It Works:

- A service mesh (like Istio or Linkerd) manages agent communication.

- It automatically establishes mTLS connections between services.

- It handles service discovery, retries, and load balancing.

Benefits:

- Encrypted service-to-service communication

- Identity verification of each agent/service

- Observability and traffic control
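In a service mesh, sidecar proxies normally handle mTLS so that agent code does not have to. To show what "mutual" means in practice, here is a hand-rolled sketch of the server-side setting using Python's `ssl` module. The function name and the optional file-path parameters are assumptions for this example.

```python
import ssl
from typing import Optional


def build_mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Create a server-side TLS context that requires a client certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED makes the TLS mutual: the client must present a certificate too.
    context.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # This service's own identity.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The certificate authority that signs agent certificates.
        context.load_verify_locations(cafile=ca_file)
    return context
```

With this setting, a handshake from a client that presents no certificate, or one not signed by the trusted CA, fails before any application data is exchanged.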

3. Message Queue with Encrypted Channels

Use Case:

When agents communicate asynchronously using queues (e.g., Kafka, RabbitMQ).

How It Works:

- Agents send/receive messages through a secure message queue.

- Messages are encrypted using AES or other enterprise-grade algorithms.

- Access to the queue is controlled via IAM roles and policies.

Benefits:

- Secure, decoupled communication

- Message durability and retry support

- Scalability

4. Zero Trust Network Architecture (ZTNA)

Use Case:

When AI agents operate across cloud, edge, and hybrid networks.

How It Works:

- Every device, agent, and user must verify identity before connecting.

- Network segmentation isolates agents by function or sensitivity.

- Continuous monitoring is used to detect and block anomalies.

Benefits:

- Prevents lateral movement in case of breach

- Adapts to hybrid and remote-first environments

- Enhanced compliance and data protection
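The combination of identity verification and segmentation can be sketched as a deny-by-default connection check. The segment names, agent names, and allowed flows below are hypothetical; in practice this policy lives in network or identity infrastructure rather than in application code.

```python
# Hypothetical assignment of agents and services to network segments.
AGENT_SEGMENT = {
    "chat-agent": "frontend",
    "billing-agent": "payments",
    "ledger-service": "payments",
}

# Explicit allow list of (source segment, destination segment) pairs.
ALLOWED_FLOWS = {
    ("frontend", "frontend"),
    ("payments", "payments"),
}


def may_connect(source: str, destination: str, identity_verified: bool) -> bool:
    """Allow a connection only for a verified identity and an allowed flow."""
    if not identity_verified:
        return False  # being inside the network earns no trust by itself
    flow = (AGENT_SEGMENT.get(source), AGENT_SEGMENT.get(destination))
    return flow in ALLOWED_FLOWS
```

Because the frontend segment has no allowed flow into payments, a compromised `chat-agent` cannot reach `ledger-service`, which is how segmentation limits lateral movement.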

5. Blockchain or Distributed Ledger for Trusted Logging

Use Case:

When sensitive decisions or transactions are made between agents.

How It Works:

- Communication logs are stored in a tamper-evident ledger.

- Blockchain ensures immutability and traceability.

- Smart contracts can govern access or actions.

Benefits:

- Auditability and transparency

- Reduces dispute risk

- Strengthens compliance in regulated industries
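The core idea behind ledger-based logging is that each entry commits to the one before it. The sketch below shows a simple hash chain, which is not a full blockchain (there is no consensus or distribution), but it illustrates why edits to past entries become detectable. All names are illustrative.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(event: dict, prev_hash: str) -> str:
    """Hash the event together with the previous entry's hash."""
    body = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def append_entry(chain: list, event: dict) -> None:
    """Append an event that is linked to the current end of the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append(
        {"event": event, "prev_hash": prev_hash, "hash": _entry_hash(event, prev_hash)}
    )


def verify_chain(chain: list) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if _entry_hash(entry["event"], prev_hash) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

Changing any recorded event after the fact causes `verify_chain` to return `False`, which gives auditors a quick way to detect tampering.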

Additional Security Measures

- Role-Based Access Control (RBAC): Assign roles to agents and restrict access based on roles.

- Secrets Management: Use tools like Azure Key Vault, AWS Secrets Manager, or HashiCorp Vault to store API keys, credentials, and tokens securely.

- Threat Detection Tools: Integrate tools like Microsoft Defender for Cloud, AWS GuardDuty, or anomaly detection models.

- Data Masking & Redaction: When agents process personal or sensitive information, ensure data is masked before use or transfer.
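As a small illustration of data masking, the sketch below redacts email addresses and card-like numbers from free text before an agent passes it on. The regular expressions are deliberately simple and are assumptions for this example; they will miss some formats and are no substitute for a dedicated data-loss-prevention tool.

```python
import re

# Simplified email pattern, for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Runs of 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def mask_card(match: re.Match) -> str:
    """Keep only the last four digits of a card-like number."""
    digits = re.sub(r"\D", "", match.group())
    return "*" * (len(digits) - 4) + digits[-4:]


def redact(text: str) -> str:
    """Mask email addresses and card-like numbers before text leaves the agent."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return CARD_RE.sub(mask_card, text)
```

Applying `redact` at the boundary where data leaves an agent keeps downstream logs and third-party services from receiving raw identifiers.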

Final Thoughts

Securing AI agent communication isn’t just a technical need—it’s a business imperative. As AI agents become more capable and integrated into core operations, potential risks also grow. Organizations can build trust, compliance, and resilience by following enterprise-grade architecture patterns and core security principles.